I recently spoke at the Agile In the City London conference. As well as watching a number of great presentations, I was lucky enough to give two of my own, and I’ve shared the slides below.

tl;dr No-one really likes performance reviews. But giving your team members good, actionable feedback and setting clear direction with them is very important. In my team we changed how we did performance reviews.

Note: This is the third post in a series on performance management and review. It’s worth reading the other two first:

In part two of the series I talked about how my team and I took the performance review and feedback system that is used at Atlassian and adapted it to suit our group’s context. I talked about the themed ‘checkin’ sessions and how we got the whole group working to a common timeline for review and feedback, and the advantages that brought.

So what happened? How were the changes received and what was the feedback from the teams?

Once we were clear on our direction, and had agreed as a leadership team how we wanted the process to work and what themes we were going to use, we prepared to sell our idea to the rest of the team. I strongly believe that one should not dictate changes that affect people’s career development or relationship with their manager, and so it was critical to us that the team members bought into the changes and our reasoning for proposing them.

We gathered the team together and explained the new process and how we felt it benefited them. I explained how I felt that the traditional process was sub-standard and could be improved. The key messages were these:

Feedback is better when it’s timely and so we want to start a process where that timely feedback enables higher quality discussions about you

As a group we agreed to run the process for one cycle and then review the results. If, after that, the teams were happy to continue then we would continue, and if not we would stop and revert to the company-wide yearly cycle.

The issue with selling an idea to a group is that often, in private, people will express their reservations more freely. So it was key to ensure that managers then spent time with their team members and gave them the opportunity on a one-to-one basis to discuss the new process. This was also a good opportunity for managers to explain the process in more detail and seek additional buy-in.

The checkin sessions themselves are intended to be 30 minutes long so that the process does not take too much time every month. This ensures that only the valuable things are discussed, and that the sessions can be to the point and targeted at what matters. However, since this was a new process, we ensured that the first few checkin sessions ran to an hour so everyone could get the hang of it.

Since checkin sessions were short and punchy – although not literally 🙂 – it was critical that everyone prepared for them. The checkin process works if the team member comes to the session having already prepared what they want to talk about. For them to be able to do that, we needed somewhere that they could document their thoughts.

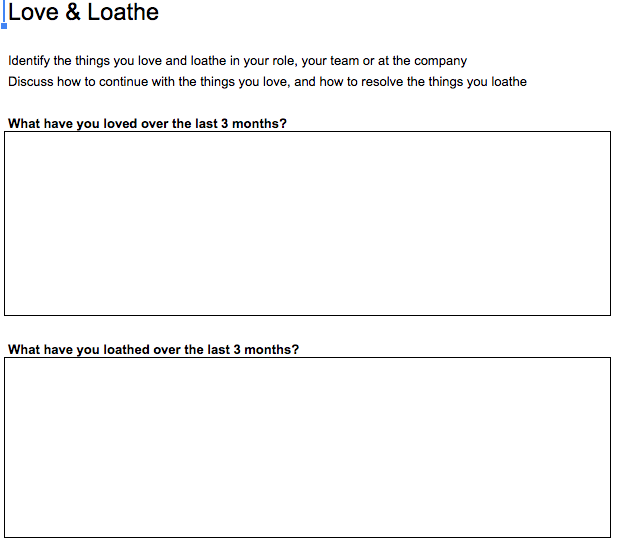

As this was an MVP, we did not want to spend much on supporting tooling (there is an excellent tool called Small Improvements which supports this sort of process), so instead we designed our own form. It’s simple and includes questions that the team member should answer in order to prepare for the session. The idea is that they fill in the relevant tab before each session, the manager reviews it and prepares based upon the information they’ve entered, and the checkin session itself can then be focused on the discussion and the actions from that discussion, rather than on the actual ‘thinking’ time. It allows sessions to be targeted and valuable.

We designed our own form to support the process. If you’d like to see a copy then get in touch. We used the questions below to drive discussions:

Another advantage of using the form was that each team member built up a record of their year, what they had done, and how they had performed. This meant that when we did need to provide yearly feedback into the main company process, it was simply a matter of extracting the information we already had in each form. It was also great to encourage team members to look back through their forms to see what they had achieved throughout the year.

Meeting with our team members every month for themed, targeted performance and career management discussions also gave us a great opportunity to give them quantitative feedback. Like a lot of companies, we use a four point scale, and at the end of each year every employee receives a performance review score which has an impact on salary and promotion – standard stuff in most companies. But that score arrives once per year, with no indication in between of how they are performing relative to that four point scale.

This seemed unfair, so we adapted the checkins process to also include giving the team member feedback each month on how they had performed against the scale and why. This helped solve multiple complaints against a yearly system:

However, merely getting a feedback score from the manager still presents a surprise, and it was important that the manager was able to have a meaningful two-way discussion about performance. In order to drive this we asked each team member to give themselves a score, on the same scale, before the session. This enabled the manager to understand how the team member felt they had performed, and it encouraged the team member to think about their achievements during that month. The discussion, held towards the end of the checkin session, could then be focused on any differences between the manager’s and the team member’s interpretations of their performance.

The first rounds went smoothly. One thing we learnt pretty quickly was that it took time to adapt to the process, and so running the first couple of checkins for each team member for an hour rather than 30 minutes was definitely necessary. Some team members also needed prompting to fill in their forms before the checkin session rather than during it, but this was to be expected given that the process was different and new.

People were surprisingly happy to provide a feedback score for themselves, and by asking them to do so we were able to have more meaningful, example-based discussions about how they had performed.

As we had promised, we ran the process for four checkins, i.e. one cycle, and then sought feedback from the team. Broadly speaking it looked like this:

We now had some feedback to work with. The experiment had been a success and was worth continuing with, albeit with some small changes. Overall we had:

In the last article in this series I’ll explain what we did in order to improve the process to suit our context even better, and some of the key learnings we got from running the process over a longer time period.

Surveys like this are really important to our community, as they give a view on where we are, our challenges and successes, and a great starting point for more discussion and potential improvement.

The survey is open and you can find it here. I’ll be filling it in, will you?

Something different for the blog this time – I’ve been reading a new book that Daniel Knott has published on Leanpub. It’s called ‘Hands-On Mobile App Testing’ and it’s intended to give a good grounding to those in the software testing industry who are getting started in mobile, as well as those who have been in the area for a while. In fact Daniel himself describes the book as:

“A guide for mobile testers and anyone involved in the mobile app business.

Are you a mobile tester looking to learn something new? Are you a software tester, developer, product manager or completely new to mobile testing? Then you should read this book as it contains lots of insights about the challenging job of a mobile tester from a practical perspective.”

It’s a very well researched book and Daniel has clearly put a lot of time and effort into writing it. As someone who also trains testers who are getting started in mobile, I liked the logical flow through the chapters. The book starts with a chapter explaining why mobile is different and setting the scene for more specific areas that are revisited in more detail in subsequent chapters. I particularly liked the section “Mobile Testing Is Software Testing” because it’s important to note that testing on mobile is complicated and that should not be overlooked.

The book contains a lot of specific technical information relating to mobile, meaning that it also serves as an interesting read for someone who may want to know more about the subject but not necessarily apply it specifically to testing. The section on business models, for example, is certainly widely applicable. Keeping the information in the book updated may well be a challenge, given the pace of change in the mobile world, but by using Leanpub I hope that’s something that Daniel will do.

I appreciated the focus that the book puts on the customer; in mobile the customer is so close, and can leave feedback so quickly, that a key testing skill is understanding them and using that understanding to drive testing. The book goes into plenty of detail in this area.

You’ll also find some very useful information on the areas that I typically get asked about by testers who are new to mobile: areas such as device fragmentation, hardware dependencies, using simulators and automation. The book is full of helpful hints and tricks, and is also a great reference source. There’s also a whole chapter on mobile test strategy that would help both test managers and testers who are new to mobile.

Automation is an area where mobile is relatively immature when compared with desktop, and I was pleased to see a whole chapter dedicated to the subject. If you are choosing a tool, there are some great hints on how to go about making that decision, as well as information on the current tool landscape.

The book then concludes with sections on important skills for mobile testers and a look to the future of mobile. Some of the important skills explained are just as applicable to software testing in general, rather than mobile specifically, but the chapter does then get more mobile specific. It was also interesting to read Daniel’s thoughts on the future; the mobile world moves so quickly that what’s future now very rapidly becomes present, and already we’re seeing some of the future technology described being available for us to test (and buy).

Overall I found the book to be a really useful addition to my library. There are not enough books on mobile testing, and this is certainly one I would recommend. It’s not just about mobile application testing either; there are sections on mobile web as well as information about the mobile world in general. Hopefully Daniel keeps updates coming via Leanpub to keep it current.

If you want to know more about mobile testing then I would certainly recommend that you take a look at the book.

You can get Hands-On Mobile App Testing from Leanpub.

Note: I was provided with a free reviewer’s copy of the book for the purpose of writing this review.